Introduction

One of my favorite things about working at FlightAware is having the latitude to tackle problems that interest me, even when they're not directly related to my current project. Recently, a few different issues related to HTTP caching all came up around the same time, and I got the opportunity to learn a lot more about our web caching infrastructure (and web caching in general) than I ever expected. In this post I'll discuss two of the issues that arose, covering their impact, root cause, and remediation.

Background

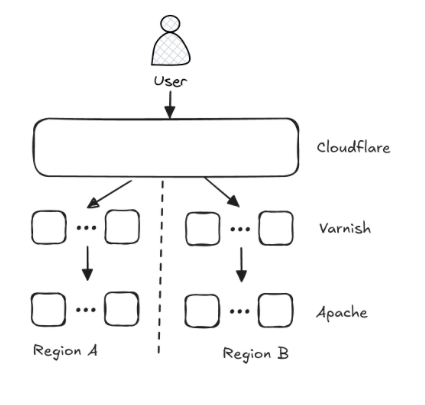

First, a brief introduction to HTTP caching to help you follow along (just the relevant bits). Broadly, there are two places where HTTP responses can be cached: your computer, and some other computer. Alright, that's an oversimplification. To be more precise, when thinking about HTTP caching as a developer you're either worried about the user's browser cache or you're worried about a caching proxy (sometimes multiple) that you control between you and the user. Both will be relevant in this post. In FlightAware's case, we have both Varnish and Cloudflare sitting in front of us, acting as two layers of caching. A diagram:

It's simple enough: Cloudflare fronts everything, with two regions of Varnish servers behind it and two regions of Apache servers behind those. If we were doing it all over again there's a good chance there would be no Varnish here, but our use of it greatly predates our use of Cloudflare, and we make extensive use of its flexible configuration via VCL.

To control how the caches behave, both in the proxy servers and in the user's browser, we can use various HTTP request and response headers to signal what content is cacheable, for how long, and so on. The most important header to call out here is the Cache-Control response header, which can carry many directives. The one you'll see referenced most in this post is max-age=<seconds>, which indicates how many seconds a response may be cached before it is considered stale.
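To make the max-age directive concrete, here is a minimal Python sketch of how a cache might pull it out of a Cache-Control value. The function names are hypothetical and don't come from any particular cache. The parser also skips edge cases that a production parser handles, such as commas inside quoted values.

```python
from __future__ import annotations


def parse_cache_control(header: str) -> dict[str, str | None]:
    """Split a Cache-Control value into {directive: value}, using None for bare directives."""
    directives: dict[str, str | None] = {}
    # Simplification: splitting on "," breaks quoted values that contain commas.
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        # Directive names are case-insensitive; values may be quoted.
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def max_age(header: str) -> int | None:
    """Return max-age in seconds, or None if it is absent or not a plain integer."""
    value = parse_cache_control(header).get("max-age")
    if value is None or not value.isdecimal():
        return None
    return int(value)


print(parse_cache_control("public, max-age=60"))  # {'public': None, 'max-age': '60'}
print(max_age("public, max-age=60"))               # 60
print(max_age("no-cache"))                          # None
```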

Case 1: The fluctuating distance

The first case was the most complex to debug, because several conditions had to line up for things to go wrong. It also required some deep research into Varnish's powerful configuration language to understand what was going on. It started as a bug report from one of our users: on the flight they were viewing, the "distance flown" would periodically creep down instead of up, as if the flight were temporarily losing progress. It sounded like we were serving them stale data (not a good look for a live flight tracking website)! But where was it coming from?

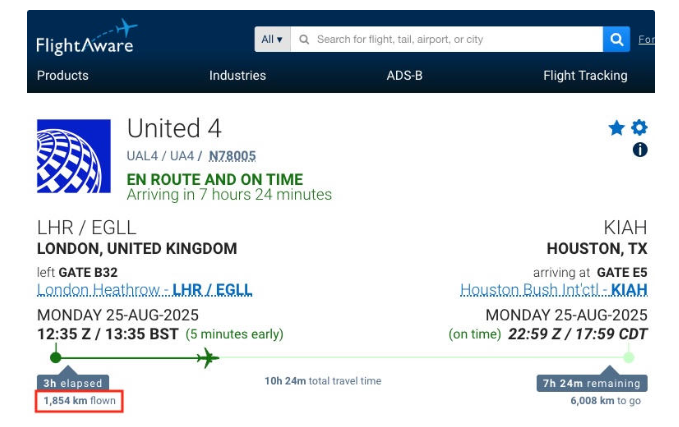

Fortunately, the problem was easy to reproduce. I just sat on a flight page with Chrome's dev tools open and waited while the page periodically requested new flight data via an AJAX call. Once I had a reproduction, I sped up debugging by peeling back the outermost layer of caching (Cloudflare) and manually exercising the guilty endpoint. This quickly revealed some striking behavior:

(Age indicates how long the response has been cached; it should generally not be more than max-age.)

We could receive continuously stale data, upwards of 8 minutes out-of-date, even with a max-age of 60 seconds. All the other headers were in good order, with Expires and max-age set consistently with each other.
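The arithmetic behind "stale" is simple. RFC 9111 treats a response as fresh while its freshness lifetime (here, max-age) exceeds its current age. Anything beyond that measures how far out-of-date the response is. The sketch below uses hypothetical Age values for illustration, not numbers captured during this investigation.

```python
def is_fresh(age: int, max_age: int) -> bool:
    """RFC 9111: a response is fresh while its freshness lifetime exceeds its current age."""
    return max_age > age


def seconds_past_expiry(age: int, max_age: int) -> int:
    """How many seconds beyond max-age a cached response has been served (0 if fresh)."""
    return max(0, age - max_age)


# Hypothetical headers: Age: 540 with Cache-Control: max-age=60.
print(is_fresh(age=540, max_age=60))             # False
print(seconds_past_expiry(age=540, max_age=60))  # 480 seconds, i.e. 8 minutes
```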

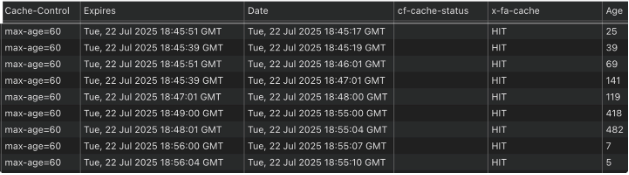

It's generally pretty tough to get HTTP caches to serve you obviously stale content, with just a couple of exceptions. One such exception is the stale-while-revalidate directive of Cache-Control. It allows a cache to keep serving a response for up to N seconds after the response goes stale. While the stale copy is served, the cache revalidates it asynchronously, either marking the cached copy as fresh again or replacing it with genuinely fresh data. But we weren't receiving a stale-while-revalidate value here, so what gives? It doesn't take much searching for the keywords "varnish" and "stale-while-revalidate" to stumble upon Varnish's "Grace mode", a setting within Varnish itself that mirrors the behavior of stale-while-revalidate. With my vocabulary expanded, it didn't take long to find the smoking gun in the change history of our main VCL file.
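The stale-while-revalidate rules can be sketched as a small decision function. This is a simplified Python model of RFC 5861 semantics. It assumes ages and lifetimes are whole seconds, and the enum and function names are hypothetical. Varnish's grace period plays the same role as the stale-while-revalidate window here.

```python
from enum import Enum


class CacheDecision(Enum):
    FRESH = "serve from cache"
    SERVE_STALE_AND_REVALIDATE = "serve stale copy, revalidate in the background"
    REVALIDATE_FIRST = "go to the origin before responding"


def decide(age: int, max_age: int, stale_while_revalidate: int = 0) -> CacheDecision:
    """Classify a cached response by its age, max-age, and stale-while-revalidate window."""
    if age < max_age:
        return CacheDecision.FRESH
    staleness = age - max_age
    # RFC 5861: a stale response may be served for up to N seconds past expiry
    # while an asynchronous request revalidates it.
    if stale_while_revalidate > 0 and staleness <= stale_while_revalidate:
        return CacheDecision.SERVE_STALE_AND_REVALIDATE
    return CacheDecision.REVALIDATE_FIRST


for age in (30, 70, 90):
    print(age, decide(age, max_age=60, stale_while_revalidate=15).name)
# 30 FRESH
# 70 SERVE_STALE_AND_REVALIDATE
# 90 REVALIDATE_FIRST
```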

This change was part of a larger wholesale migration of our Varnish configs during an upgrade from Varnish 3 to Varnish 7. I think it's likely that the developer thought the upper block was simply being overridden by the lower one and was thus unnecessary.

To further complicate things, this code block modifies two different grace settings: req.grace and beresp.grace. All that's important to know is that req.grace takes precedence over beresp.grace (strictly speaking, Varnish uses the smaller of the two). So by removing the upper block, we went from a grace period of 15 seconds to 10 minutes!
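A simplified Python model of that interaction follows; it is not Varnish code. It assumes the removed upper block set req.grace to 15 seconds and the surviving block set beresp.grace to 10 minutes, matching the numbers above. Varnish documents req.grace as an upper limit on the object's grace, applied as the minimum of the two at lookup.

```python
from __future__ import annotations


def effective_grace(beresp_grace: float, req_grace: float | None = None) -> float:
    """Grace (seconds) used at lookup: the object's grace, capped by req.grace if VCL set it."""
    if req_grace is None:
        return beresp_grace
    return min(req_grace, beresp_grace)


BERESP_GRACE = 10 * 60  # assumed: the surviving block's 10-minute beresp.grace

print(effective_grace(BERESP_GRACE, req_grace=15))  # 15: both blocks present
print(effective_grace(BERESP_GRACE))                # 600: upper block removed
```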

So a grace period of 10 minutes means that the first request within 10 minutes of a resource going stale gets the stale data, but every request after that should be fresh, right? Then how did I get 5 stale responses in a row during my experimentation? Remember the infrastructure diagram above? Behind Cloudflare sits a sizable collection of Varnish servers, each with its own cache. Any request from a user can go to any of those servers (sorry, no sticky sessions), so you could get stuck hitting the grace period on one stale cache after another. Ouch.
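To see how multiple caches compound the problem, here is a toy Python simulation built on three assumptions:

- Every node holds a copy that is expired but still within grace.
- Requests are spread round-robin, as a stand-in for arbitrary load balancing.
- A node's background refresh finishes before its next request.

The node count and names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import cycle


@dataclass
class CacheNode:
    """One Varnish-like node whose copy starts out expired but within grace."""
    name: str
    in_grace: bool = True

    def handle(self) -> str:
        if self.in_grace:
            # Grace: serve the stale copy and trigger a background refresh.
            # Simplification: the refresh completes before this node's next request.
            self.in_grace = False
            return "stale"
        return "fresh"


def simulate(node_count: int, requests: int) -> list[str]:
    """Spread requests round-robin over independent nodes and record what each returned."""
    nodes = [CacheNode(f"varnish-{i}") for i in range(node_count)]
    ring = cycle(nodes)
    return [next(ring).handle() for _ in range(requests)]


print(simulate(node_count=5, requests=7))
# ['stale', 'stale', 'stale', 'stale', 'stale', 'fresh', 'fresh']
```

Each node independently serves one stale response before refreshing, so the number of consecutive stale responses a client can see grows with the number of caches it can land on.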

Resolving the issue was fortunately simple: remove the setting altogether. Varnish's default grace is 10 seconds, which is close enough to our original 15. By leaving it unset, we also get the benefit of Varnish respecting any stale-while-revalidate directive we choose to specify ourselves at the origin.
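When VCL leaves beresp.grace alone, Varnish roughly starts from the response's stale-while-revalidate value if there is one. Otherwise it falls back to the default_grace parameter (10 seconds). The Python function below is a simplified model of that fallback, not Varnish's implementation, and its name is hypothetical.

```python
from __future__ import annotations

import re

DEFAULT_GRACE = 10  # seconds; the default value of Varnish's default_grace parameter

_SWR = re.compile(r"(?:^|,)\s*stale-while-revalidate\s*=\s*(\d+)", re.IGNORECASE)


def initial_grace(cache_control: str | None, default_grace: int = DEFAULT_GRACE) -> int:
    """Grace to use when VCL leaves beresp.grace unset (simplified model)."""
    if cache_control:
        match = _SWR.search(cache_control)
        if match:
            return int(match.group(1))
    return default_grace


print(initial_grace("max-age=60"))                             # 10
print(initial_grace("max-age=60, stale-while-revalidate=30"))  # 30
```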

Case 2: What the Pragma?

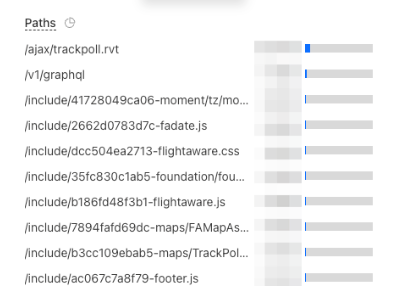

The next case was a bit more impactful than the first, to the tune of thousands of dollars a month on our Cloudflare bill. Our Operations team had recently informed us of some unexpected Cloudflare bandwidth overages that seemed to be getting worse. Although the underlying cause wasn't well understood, I went looking for some low-hanging fruit that might stem the bleeding. It didn't take long to stumble across this graph:

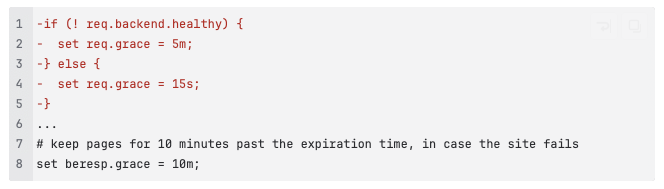

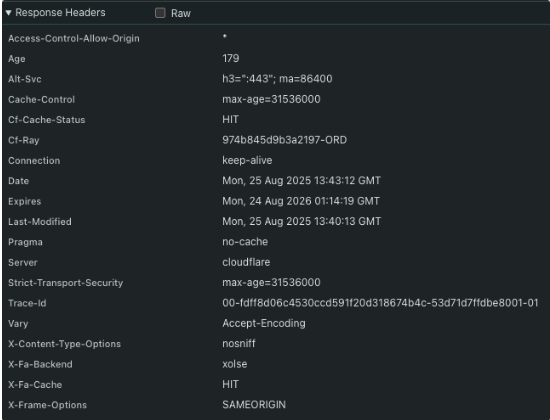

Why were 8 of our top 10 requests simply fetching static JavaScript/CSS resources? The pattern here may be familiar: we include hashes in the paths to each file. This lets us set extremely long cache times on the resources while retaining the ability to push out new versions when needed, by pointing to paths with new hashes. In short: requests for these paths should almost always be served by the user's own browser cache, not Cloudflare. Looking at the response headers for one of the requests showed what I expected, a Cache-Control header with a wildly high max-age:
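For readers unfamiliar with the pattern, here is a minimal Python sketch of content-hashed asset names. The SHA-256 prefix length, the file names, and the one-year max-age value are assumptions for illustration. The post doesn't say which hash or exact max-age FlightAware uses.

```python
import hashlib
from pathlib import PurePosixPath

# Assumed header for hashed assets; the post only says the real max-age is "wildly high".
HASHED_ASSET_CACHE_CONTROL = "public, max-age=31536000, immutable"


def hashed_path(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a content hash before the extension, e.g. static/app.js -> static/app.<hash>.js."""
    p = PurePosixPath(path)
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


v1 = hashed_path("static/app.js", b"console.log('v1');")
v2 = hashed_path("static/app.js", b"console.log('v2');")
# New content yields a new URL, so browsers can cache each URL "forever"
# without ever serving an outdated build.
print(v1 != v2)  # True
```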

However, it also revealed something else interesting. What was that Pragma: no-cache doing there? What the heck does that header even do? Well, it turns out that it depends. The Pragma header is an artifact of the HTTP/1.0 era, before the Cache-Control header existed, when the question of caching had a simple, binary yes/no answer. Today, though, the header is deprecated (as MDN clearly communicates with a big red box) and its use is discouraged. "No big deal," I thought to myself, "since it's deprecated, surely it will be overridden by any settings in the Cache-Control header." And indeed that's exactly what MDN seemed to indicate:

Note: The Pragma header is not specified for HTTP responses and is therefore not a reliable replacement for the HTTP/1.1 Cache-Control header, although its behavior is the same as Cache-Control: no-cache if the Cache-Control header field is omitted...

I had to return to that page several times and reread it to finally notice that there's more to that quote:

...in a request.

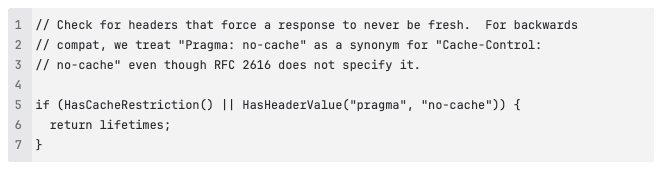

Oof! So Pragma: no-cache in a request is overridden by the request's Cache-Control header, but Pragma: no-cache in a response has unspecified behavior. Could this mean that Varnish and Cloudflare ignore the header, but Chrome doesn't? Why yes, that's exactly what it means, and Chromium's source code confirms it.

Varnish, on the other hand, ignores it completely.
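The difference can be modeled in a few lines of Python. This is a deliberately simplified sketch, not Chromium's or Varnish's logic. It only looks at Cache-Control and Pragma, ignoring Expires, Age, and heuristic freshness. The honors_response_pragma flag is a hypothetical switch standing in for "browser-like" versus "proxy-like" behavior, and the one-year max-age in the example is likewise assumed.

```python
from __future__ import annotations


def may_reuse_without_revalidating(headers: dict[str, str], honors_response_pragma: bool) -> bool:
    """Toy freshness check looking only at Cache-Control and, optionally, Pragma."""
    lowered = {name.lower(): value.lower() for name, value in headers.items()}
    directives = [d.strip() for d in lowered.get("cache-control", "").split(",")]
    if "no-cache" in directives or "no-store" in directives:
        return False
    # Browser-like behavior: a response Pragma: no-cache is treated like
    # Cache-Control: no-cache, even though max-age is present.
    if honors_response_pragma and "no-cache" in lowered.get("pragma", ""):
        return False
    for directive in directives:
        name, _, value = directive.partition("=")
        if name == "max-age" and value.isdecimal():
            return int(value) > 0
    return False


response = {"Cache-Control": "public, max-age=31536000", "Pragma": "no-cache"}
print(may_reuse_without_revalidating(response, honors_response_pragma=True))   # False (browser-like)
print(may_reuse_without_revalidating(response, honors_response_pragma=False))  # True (proxy-like)
```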

There's some nuance to why we were setting the Pragma header in the first place, but the gist of it is this. Early in processing some requests, we set things up to not be cached (Expires: 0, Cache-Control: no-cache, no-store, must-revalidate, max-age=0, Pragma: no-cache; the whole nine yards!). Later in the request we may decide the response should be cached after all, so we update the to-be-emitted Expires and Cache-Control headers accordingly, but we had forgotten to clear out the Pragma header. So, again, the fix was just to delete some code (specifically, the initial setting of Pragma).
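The shape of the bug is easy to reproduce in any language that builds headers in stages. Here is a Python sketch of the fixed flow. The function names, the public directive, and the one-hour max-age are hypothetical, since the post doesn't show the real code.

```python
from __future__ import annotations

import time
from email.utils import formatdate


def mark_uncacheable(headers: dict[str, str]) -> None:
    """Early in the request: assume the response must not be cached."""
    headers["Expires"] = "0"
    headers["Cache-Control"] = "no-cache, no-store, must-revalidate, max-age=0"
    # Before the fix this also set headers["Pragma"] = "no-cache", and nothing
    # on the cacheable path below ever removed it. The fix was to stop setting it.


def mark_cacheable(headers: dict[str, str], max_age: int) -> None:
    """Later in the request: the response turned out to be cacheable after all."""
    headers["Cache-Control"] = f"public, max-age={max_age}"
    headers["Expires"] = formatdate(time.time() + max_age, usegmt=True)


headers = {}
mark_uncacheable(headers)
mark_cacheable(headers, max_age=3600)
print(sorted(headers))  # ['Cache-Control', 'Expires']
```

Because mark_cacheable only overwrites the headers it knows about, any header set earlier that it doesn't touch survives into the response, which is exactly how the stray Pragma slipped through.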

Conclusion

When a website (or any piece of software) hangs around for 20 years, it accrues its fair share of mysterious cruft: lines of config, blocks of code, even comments that people are afraid to remove lest they break something seemingly unrelated. Let this go on for too long, though, and you end up with something that's impossible to maintain, cruft layered on top of cruft until you finally have to start all over. I hope these stories show that it doesn't have to be that way. Computers can be understood. You can root-cause bugs, fix them, and make the whole thing simpler in the process!